Extracting Eye Region with MediaPipe Face Landmarks

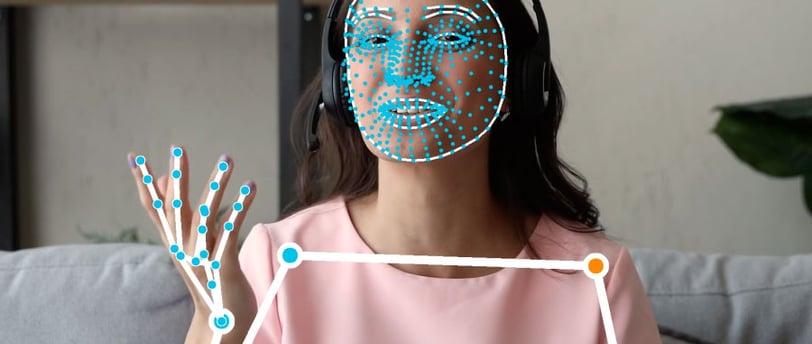

In this blog post, we explore the topic of face landmarks and how to use Google's MediaPipe library to detect and track facial features in images and videos.

MACHINE LEARNING

3/4/20234 min read

What is mediapipe?

Mediapipe is a cross-platform, open-source framework developed by Google that enables developers to build real-time computer vision applications. It provides a wide range of pre-built models and tools, making it easy for developers to build custom computer vision pipelines without needing to write complex code from scratch. One of the most popular features of Mediapipe is its face mesh model, which can identify and track facial landmarks in real time, making it useful for a variety of applications such as facial recognition and augmented reality. With its ease of use and robust features, Mediapipe is a powerful tool for anyone looking to build computer vision applications. Mediapipe is a flexible framework that supports multiple programming languages, including C++, Python, Java, and JavaScript. This enables developers to choose the language that they are most comfortable with and integrate Mediapipe into their existing workflows and projects with ease. Additionally, Mediapipe provides comprehensive documentation and tutorials for each language, making it easier for developers to get started regardless of their skill level; in this post, we are going to use Python.

Let's dive in

First thing: we need to install mediapipe, which is as simple as typing: pip install mediapipe. Then in our Python application, we have to instantiate the face_mesh object:

static_image_mode is a Boolean parameter that is set to True by default, indicating that the input is a single static image. If you are processing a video stream, you can set this parameter to False, I am setting it to True even if I am using it in a video setting because am not interested in better performance at this stage. max_num_faces is an integer parameter that specifies the maximum number of faces to detect in the input. In this example, it is set to 1 to detect only a single face. refine_landmarks is a Boolean parameter that is set to False by default, indicating that additional landmarks beyond those needed for eye extraction are not needed. If you need more precise facial landmarks, you can set this parameter to True. min_detection_confidence is a float parameter that sets the minimum confidence threshold for face detection. Faces with a confidence score below this threshold will not be detected. In this example, the threshold is set to 0.5. Now, we are already ready to process images, which would be as simple as:

Kindly note that the image must be passed in RGB format. Once you execute the 'process' function, you will receive an object called 'multi_face_landmarks' as output. In case the value of 'multi_face_landmarks' is 'None,' it indicates that the processing was not successful, for example, if no faces were detected. Therefore, you should handle this situation accordingly:

if that is not None, it will contain a list of landmarks (one for each detected face). In our case, it's going to be just one face, so we can safely get it like this:

Each face is represented as a list of 468 landmarks. Each landmark is defined by its x, y, and z coordinates, with x and y normalized to the width and height of the image, respectively. The z coordinate represents the landmark depth, with smaller values indicating that the landmark is closer to the camera, and the magnitude of z uses a similar scale as x. The depth at the center of the head is used as the origin for z coordinates. To obtain the original pixel values for the x and y coordinates, you will need to reverse the normalization process by multiplying x by the width of the image and y by the height. This is a common operation when working with normalized image coordinates. This is the function I used for doing that:

The variables x and y represent normalized coordinates, whereas w and h denote width and height, respectively. After obtaining the list of facial landmarks from the face_mesh object, the next step is to extract the eye region from the input image. To do this, you can use the indexes corresponding to the eye landmarks, which can be found in the documentation provided by MediaPipe. Once you have the appropriate indexes, you can subset the original image and print the eye region:

Look at the complete example

You can find the all code in my Github account. As you can see in the code, I get a frame from the camera using open-cv, and I apply the operations we have seen here. I also try to keep the eye region aspect ratio within a threshold. Try it out and let me know if it does not work for you or if you are looking for something a bit different.

Real use-case applications

Extracting the eye region can have multiple use cases. In one of my recent projects, I faced the challenge of transforming the eye region in real-time to remove glasses. To solve this, I trained a Pix2Pix GAN model using TensorFlow (TF) in Python, and then utilized TensorFlow.js (TFJS) for inference in the browser. To optimize the performance of the model, I extracted only the relevant eye region and passed it to the model for processing, so I reduced the input image size. This approach improved the speed and efficiency of the model, enabling it to perform effectively in real-time and resulting in an enhanced user experience. Overall, the combination of TF and TFJS proved to be a powerful and versatile solution for my image processing needs. By leveraging the strengths of these tools, I was able to successfully overcome my challenges and achieve my project goals.

e.durso@ed7.engineering

Headquartered in Milan, Italy, with a strong presence in London and the ability to travel globally, I offer engineering services for clients around the world. Whether working remotely or on-site, my focus is on delivering exceptional results and exceeding expectations.