Introduction

In the first part of this series, we discussed the process of collecting and preparing the data needed to train a person detection model. Now that we have our dataset ready, it's time to dive into the process of training the model using TensorFlow and subsequently converting it to TensorFlow Lite (TFLite) for deployment on mobile and edge devices. In this second part, we will assume that the data is already available, and we will follow a reference notebook hosted on GitHub to walk you through each step.

Model Architecture selection

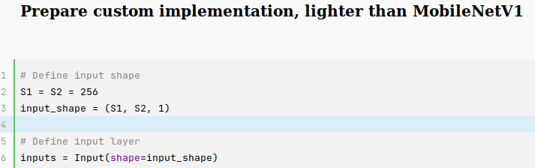

Considering the constraints of the deployment environment, resource-intensive models such as VGG16/19, Xception, and their counterparts are not suitable choices. A more fitting alternative is MobileNetV1, which offers a lightweight and efficient architecture specifically designed for mobile and edge devices. MobileNetV1 is a highly efficient convolutional neural network architecture designed specifically for mobile and embedded vision applications. It was introduced by Google researchers in 2017 as a lightweight alternative to more computationally intensive models like VGG and ResNet. The main innovation behind MobileNetV1 is the use of depthwise separable convolutions, which significantly reduce the number of parameters and computational complexity compared to traditional convolutional layers. Depthwise separable convolutions consist of two steps: depthwise convolutions and pointwise convolutions. In depthwise convolutions, each input channel is convolved with its own set of filters, while pointwise convolutions combine the outputs of depthwise convolutions using 1x1 convolutions. This results in a model that is smaller in size, faster to run and has lower memory requirements. You can easily download a MobileNet model (with weights), like this:

A simple quantization example

Let's try to make it even clearer, with a simple example, let's suppose we have a network with two fully connected layers, an input which is a scalar (1x1, for simplicity). We will have an input processing, to convert from float to integer, let's assume our input value is x = 2.0, and the input scale and offset are x_scale = 0.05 and x_offset = 100. We preprocess the input by converting it to an 8-bit integer using the scale and offset: x_quant = round((x / x_scale) + x_offset) = round((2.0 / 0.05) + 100) = 140 In the first forward pass, we perform the pure integer computation: y_quant = x_quant w_quant + b_quant. Where w_qaunt and b_quant are the quantized weights. Then we pass the output to a RELU, achieving: y1_quant_relu = max(y1_quant, zero_point) . The second forward pass is similar, but with different weights: y2_quant = y1_quant_relu * w2_quant + b2_quant. Then for the output layer, we can potentially go back to float, like this: y2 = y2_scale * (y2_quant - y2_offset).